Search engine optimization (SEO) is building or improving websites that appeal to search engine users and search engine robots. There are technical factors to consider for robots and experience factors to consider for people. SEO is often a consultative effort with developers and designers responsible for building a website and writers responsible for content. The result of properly implemented SEO recommendations on a website is more users arriving from search engines (organic search traffic).

This article will talk a lot about Google, because they own ~92% of the Search Engine Market Share Worldwide. Ranking well in Google usually translates to ranking well in other search engines, like Yahoo, Bing, or DuckDuckGo. There may be some minor differences if you want to rank well in international search engines like Yandex (Russia), Baidu (China) or Naver (South Korea).

Which factors are important for search ranking?

Starting from the top, these are in order of need.

- Accessibility for search engines

- Unique Content

- Links (Internal and External)

- Keyword / Topic focus

- User Intent

- User Experience (device compatibility, speed, UI)

- Fresh content

- Click-through rate (CTR)

- Expertise, Authoritativeness, Trustworthiness (EAT)

- Search engine guidelines (don’t break the rules)

SEO and how search engines work

Robots

A search engine robot is a software program that “crawls” webpages by following links. The “bot” collects (or indexes) the code on the page and the connections (links) between pages. The structure of words and code on a page gives context to the information gathered.

SEO requires understanding the perspective of a search engine robot. In other words, how does a web crawler view the entirety of a website or domain? What metadata is on the pages? How are the pages linked together? Taking it further, consider how a search engine views the entire collection of websites on the Internet.

The best way to understand how web crawlers work is to use one yourself! There are several popular options, both software installed locally (Screaming Frog, Sitebulb, Xenu’s Link Sleuth) and cloud-based (Botify, DeepCrawl, OnCrawl). Many SEO tools (Moz, Ahrefs, SEMrush, etc) use bots to crawl the internet to build their datasets.

People

Google says, “Make pages primarily for users, not for search engines.” This is their #1 principle, and it makes sense. Empathy is the key. It’s important to consider the frame of mind, intent, and the overall experience that people have when they search the Internet. Google’s search product is only popular because it delivers a high-quality content experience. If a page isn’t helpful or delightful to a person, then it will not rank well in a modern search engine.

That’s basically all you need to know! (But keep reading if you want to know the nitty-gritty.)

Algorithms

Search engines use proprietary algorithms (lots of baby algorithms, working together) to rank search results based on the quality and relevance of the resource page to the search query. You can study patents, and announcements and expert opinions, but Google keeps it's algorithms a closely guarded secret.

SEO factors can be broadly categorized as on-page or off-page efforts. On-page factors are things a website owner can control, like their own website or other owned properties like business directories and social profiles. Off-page factors are external signals found on websites or databases that influence search rankings, like external links from other websites, sentiment, or behavioral data collected.

1. Accessibility

First and foremost, search engines (SE) need to access a website and all of its pages. If a SE crawler cannot find and index a page, there's no chance for ranking in search results. It needs to be easy to understand what a page is about. There are several ways that a search engine crawler might have difficulty accessing or understanding a page:

- Robots.txt disallow

- Meta tags (noindex, nofollow)

- Error status codes (non 200 status)

- Incorrect canonical tags

- Non-indexable content (javascript / flash / video)

- Crawl budget

- No links to a page

Robots.txt lives at the root of your domain (domain.com/robots.txt). It tells robots (AKA bots, web crawlers, spiders) which pages they can and cannot crawl. Disallowing a page does not exclude it from a search engine index. Disallowed pages can still show up in search results if discovered via other signals like links. Most bots will follow these directives, although they are not forced to. Major search engine bots like googlebot or bingbot should always follow the rules. You can test if pages are accessible with Google's Search Console robots testing tool (requires GSC account).

A meta robots noindex tag will exclude a page from the search index. This tag tells a web crawler to not include the page in their search index, so it won't be returned in search results. Noindex pages are still crawlable and still pass link value through the page. Some recent statements by Google spokespeople have indicated that pages with noindex tags on them may get crawled less frequently over time to the point of not being crawled.

Status codes. A 200 OK status code is the server telling the requesting machine that the request was successful. A page must return this status for a search engine to crawl and index it. Other codes include 3xx (redirection codes like 301, 301 and 307), 4xx (error codes like 404, 410) and 5xx (server errors like 500, 503, 504). Each family of status codes includes several codes for slightly different situations. Don’t serve a 200 or a 301 on a not found page, serve the proper status, 404 not found.

Canonical tags tell web crawlers which duplicate page is the correct URL to index. If the canonical tag is not correct, it will cause confusion for the search engine crawler. Canonical tags are “hints” for Google, rather than directives, so they may use other signals to choose a canonical if there is confusion. Find more about causes of duplicate content below in the Unique Content section below.

If content depends on client-side rendering processes (javascript, flash) or is not in text form (image, video, audio), web crawlers have difficulty understanding it. Modern search engines, like Google, can process and render javascript but it takes a tremendous amount of resources to do this at scale (the entire Internet). Due to the resources it takes, rendering javascript is done as a separate process, in a prioritized queue. The page must be considered an important, high quality page to be prioritized for rendering. The optimal way to serve search engine crawlers a page is to render all the html on the server side, before it gets to a client machine (a human user’s computer or a web crawler).

Crawl Budget / Crawl Rate

Crawling every website on the Internet is a massive task requiring a lot of computing resources, so Google must prioritize or budget how they spend resources each day. Crawl rates are set for each website depending on size, speed, popularity, and how often it changes. More pages might be crawled if they are faster to download or if the pages are very popular. Google will crawl fewer pages over time if the pages crawled are of low quality or not useful to return for search results. If googlebot encounters a lot of errors or redirection while crawling, this can negatively impact crawl rate.

When a search engine has difficulty crawling a site or is only consuming low quality pages, it can make the site appear to be lower quality overall and negatively affect all rankings on the domain.

No links to a page

A search engine web crawler won’t find a page unless they find a link to that page. If the links are few and far between, or buried deep in a site, that linked page may never be found. Find out more about improving linking and site structure in the Links section below.

2. Unique Content

If the same page or mostly the same page can be found at a different URL (any small difference), it is considered a duplicate page. Search engines identify the best or most original result and filter out other duplicate results. Quite a lot of duplicate content is created on the Internet both intentionally (stealing, copying, and syndicating content) and unintentionally (CMS setup, domain/network settings).

A few common, unintentional duplication issues are:

- Query parameters / UTM codes / tracking codes

- Different domains or subdomains pointing to the same pages (non-www vs. www versions)

- Protocol (https vs. http)

- URL structure (trailing slash vs not)

- Varied capitalization

The issues mentioned above result in slight variations to a URL that might still load the same page. The best way to fix duplicate pages is redirecting to the canonical version. The canonical tag is also recommended; this tells the search engine to consolidate value on the canonical URL. Google filters out duplicate URLs; they are not penalized. If duplicate URLs are not managed correctly, link value can be split between different variations of the URL.

Google is very good at determining the original publisher of content. If your entire website is made up of content copied from other sources, you will find it difficult to achieve any search rankings. Search engines have the tools to identify near-duplicates, like copying short sections of text from a variety of sources (shingling) or slightly re-wording existing content (spinning). Tactics that involve duplicating existing content are not going to work well. Create something unique (and valuable) if you want to earn search engine traffic.

3. Links (Internal and External)

Google changed the search engine game by ranking pages by popularity (links pointing to them) rather than simplistic measurements of keyword occurrence on a page. Larry Page, Google co-founder, developed a scoring system called PageRank that measures a webpage’s importance by the number of links pointing to it around the world wide web. Each page builds up value (aka page value, search value, link value, link juice, page authority) determined by the quantity and quality of links pointing to the page.

Link quality is measured by:

- Page authority of the linking page

- Relevance

- Trust

- Placement on page, context

- Anchor text

- Number of other links on the page

- nofollow

Link value is recursive because the authority of a page is largely determined by the quantity and quality of links pointing to it. In other words, authoritative pages have other authoritative pages linking to them.

Link relevance is important in 2 ways: 1) The relevance of the linking page to the link destination, and 2) maintaining the relevance of a page over time in relation to links pointing at it. If a site owner radically changes or removes a page, existing links pointing to it will lose all value because the relevance is obliterated.

Trust can be measured by how close a domain is to a set of trusted domains, level of badness detected on a domain, and user sentiment in reviews found on other sites.

The placement of a link on a page may determine its relative value (footer = low; repeated link box on every page = low; contextual link within article = high; list of related links = medium; links in main navigation = helps indicate site structure or organization).

Anchor text is the text that is linked. These keywords help a search engine better understand what a linked page is about.

The number of links on the page matters because the page value is split between all the links on the page. If a page has a theoretical value of 6 and has three links on it, then a value of 2 (minus a dampening factor) flows through each link to the destination page. PageRank is monumentally more complex than that, but this is a simple way to understand the basic concept.

Nofollow is a meta tag that tells search engines not to trust the link, which means that link value will not flow through it. Google recently modified their guidance on nofollow to indicate it is treated as a “hint” versus a directive, so there is a possibility that some nofollow links might be used for ranking.

Site structure (internal linking)

The structure of a site is defined by the internal links setup from the homepage to topic pages to article or leaf pages and everywhere else. A site’s main navigation, footer, contextual linking, topics, content types, archive, and pagination all impact the site structure. External link value can enter a site at any page, but often the homepage of a site has the most external and internal links pointing at it. Link value flows throughout a site via internal links, so it’s important that internal links distribute link value evenly throughout the site, especially to the content pages that can rank well in SERPs.

Think about site structure as “levels” or clicks away from the homepage, also called crawl depth. Use a web crawler to check for crawl depth to determine if you have pages that are far away from the homepage or other entry points. Learn more about crawl depth and one way to improve it with XML and HTML sitemaps.

The pages on a website with the most internal links will be seen as the most important pages on that site. Make sure it’s clear what your most important pages are. Make sure the most important pages to you are also the most important pages to your audience.

4. Keyword / Topic focus

Search engines need to understand the aboutness of a page. This starts with keywords. A keyword is a word or phrase that represents a concept. Search engines publish monthly search volumes on keywords to help content creators understand what people are searching for.

Ranking for high volume keywords is difficult, but if you don’t target some search volume, you’ll be writing for something that no one is searching for.

Keyword research also includes a competitive analysis. Check the search engine result pages for the keywords you are researching! If you are targeting a high volume, high-value keyword you are likely up against some tough competition. Know that you’re going to have to create something better than the top-ranking pages.

As a business, you may think you want to rank for some high volume keyword, but if you don’t understand the user intent of a query (see below), you could be putting in a lot of effort only to attract people who don’t need or want your solution.

Use your research to identify a target keyword and use variations of it in your titles and throughout the content. Make sure your writing sounds natural, not robotic or repetitive. Don’t try to fill the page with irrelevant or unnatural language just to cram keywords in there.

Building on keywords, a topic encompasses multiple keywords and sub-topics. In the early days of search engines, it was important to focus on a specific keyword for each page, but with advancements in natural language processing, search engines better understand the concept of a page and the meaning of a query. Search engines have scanned enormous corpora of text to build entities so they understand how words and things are connected, clustered, and related to each other. A page can now rank for hundreds or thousands of keywords even if not specifically mentioned on the page.

One factor in how well a page ranks is how completely it covers the topic. Understand the sub-topics that are covered (or should be covered) on a topic and ensure that you have the most comprehensive page around.

The converse of this point is that a page should not cover a variety of topics. One URL should represent one concept or topic (including sub-topics) so the page can be understood as a resource or answer to a search query. A search engine will have difficulty understanding what a page is about if there are many different topics covered on the same page or URL.

Keyword Research Tools

Check out this list of free keyword research tools, and another list of top keyword tools.

5. User Intent

Explicit and Implied intent

User intent is the specific goal a user has in mind when using a search engine. Intent can be explicit or implied. If the intent is included in the query, like “buy board games” it’s an explicit or clear intent that the user wants to make a purchase.

Many queries have an implied intent. Consider the query: “red wine stain.” It probably seems clear someone wants information on cleaning a red wine stain. This is implied intent and you can see that in the search results.

Machine Learning informs User Intent

Google uses machine learning on large data sets to understand implied intent at scale. Tracking what kind of results people are more often clicking on, they can predict user intents that are not explicitly stated.

Understanding how Google interprets user intent is as simple as checking the search results. For example, search for “board games” and you will see stores that sell board games because this query has a high intent for shopping or buying. Notice there are some lists of "best board games" ranking because the query also implies the user hasn’t decided which game to buy yet.

Test variations of queries and review the results to better understand varied user intents. If you want your web page to rank for a query, you have to align with the primary user intent(s).

Do, Know, Go

Google refers to the most basic user intents in their Quality Raters Guidelines as “Do, Know, Go”:

- Do: Transactional (want to do or buy something)

- Know: Informational (what is this? or how does this work?)

- Go: Navigational (where is the website? where is something located? Also referred to as “website” intent)

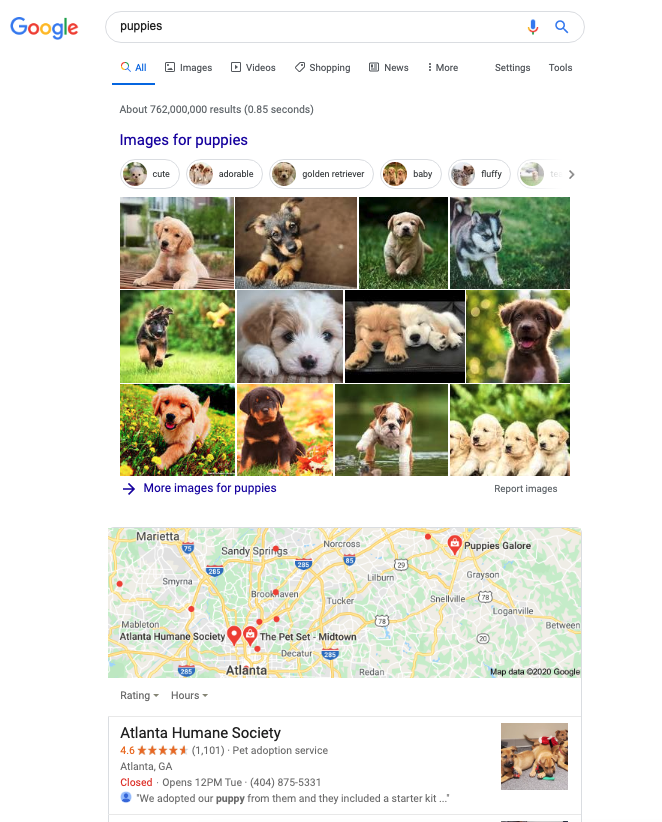

Additional SERP features are shown when an intent for a feature is high. Searches that indicate a need for a local business or landmarks near the searcher get “local intent” and show Google Map packs. A search for “lab puppies” usually shows a prominent image pack because the intent for photos is high. Many queries have high intent for videos and the video carousel is shown. Understanding where these are happening can help develop a successful content strategy.

Fractured Intent

In many cases, a query may have many different intents, this is called “fractured intent”. Google will present a variety of user intents for a query that is not clear cut. This is most common on broad queries like “dog,” where you will see guides and questions on caring for dogs, videos of dogs, list of dog breeds, and general information about dogs.

Compare the type of results for the similar query “puppies,” where user intent appears to be more about adopting a puppy, and cute photos.

Natural Language Processing Advancements

To understand user intent, a machine must first understand how the words in a query relate to each other. Natural language processing (NLP) allows computers to understand the subject, predicate, and object (SPO) and more complex word relationships, meanings, modifiers, and entities. A similar set of words in a different context or order can mean entirely different things:

- A car leaves its shed.

- A tree shed its leaves.

Google’s launch of the Hummingbird Algorithm helped them understand “conversational search” or semantic search much better. It allows the search engine to understand the meaning behind keywords or phrases via context and logic.

Google’s Knowledge Graph allowed them to understand how things (or entities) are connected. It’s a database of facts on people, places, and things, how they relate to each other, and what those relationships mean. Google announced the launch of their Topic Layer within their Knowledge Graph which is “engineered to deeply understand a topic space and how interests can develop over time as familiarity and expertise grow.”

Google can understand what a user wants when they type in a query like, “What’s that movie about a tiger in a boat?” Google can understand the sentance structure and apply their knowledge graph around movies and entities (things) within movies.

Creating content designed to fulfill user intent is about putting the user first. Create content to meet the searcher’s needs, not yours. People respond positively when you help them accomplish their goals!

6. User Experience (UX)

What is user experience in relation to a system, website, or app?

User Experience is all the feelings and perceptions that a user has when using a system or product. It comprises every step a user must take to interact with or manipulate a system to accomplish a goal successfully. User experience is a perception, and perceptions are subjective. Each person has a unique set of personal experiences that shape how they interact with the world, but creating a great user experience is about identifying the shared needs and practices of specific user groups. Designing an experience that is recognizable and meets expectations should be the goal. The ideal experience with a system or product is clear, easy to use, efficient, comfortable, and ultimately provides value.

General user experience tips

Don’t reinvent the wheel, use common elements that are recognizable. Make sure important elements are prominent. Highlight where attention is needed. Create organizational hierarchies from simple to complex or broad to specific. Understand different goals, paths, and tasks users might want to complete. Don’t put blockers in the way of completing a task, like blocking or distracting the reader from the main content on the page. Visualize the steps it takes to perform a task, find ways to reduce steps and make it quicker to finish. Reduce friction, objections, or issues that might slow down or de-motivate a user to complete a task.

What user experience is not

User experience is NOT about making the user do what you want them to do. If the only goal is to push people into signing up or buying, it may not result in a positive user experience. Understand what the user wants, give them that. Solve your marketing goals by removing barriers, expanding options, offering enticement, and providing knowledge and context.

How to assess the “search experience”

It almost goes without saying that search engines care about the search user’s experience. Take the time to see for yourself what the search experience looks like for your audience: Enter a search query, find a relevant result, visit the page, scan and read the visited page, accomplish a task on the page, and discover related content on the same site that aligns to the user intent.

Engagement, perhaps the most powerful signal

Engagement /inˈɡājmənt/ : the act of engaging : the state of being engaged \

Engaging: tending to draw favorable attention or interest,

Engaged: involved in activity

The most powerful, attractive content is engaging. It creates interest and activity. What are some qualities of engaging website content?

- Interactive

- Interesting

- Unique

- Visually pleasing

- Easy to read

- Completes tasks

- Solves problems

- Delightful

Create content that users can interact with, click on, select options, customize their view, discover and learn more. An article can engage, or have engaging elements added to it, but try to go beyond just an article. Think about other types of content like quizzes, polls, surveys, tools, calculators, interactive infographics, contests, challenges, awards and discussions (forum, comments, live blogging, AMA).

People will naturally want to share engaging, delightful content with their friends and colleagues. Content with high engagement will always drive more amplification signals (links and shares).

Website speed is part of user experience

Web page loading time is also a factor in user experience. Much emphasis has been put on optimizing website speed since Google announced it was a light-weight ranking factor in 2010. An update in 2018 brought mobile web page speed into consideration, whereas previously only the desktop version of the site was considered.

Google continues to highlight the update “will only affect pages that deliver the slowest experience to users and will only affect a small percentage of queries … The intent of the search query is still a very strong signal, so **a slow page may still rank highly if it has great, relevant content.**” Regardless, optimizing speed on a website can help with user engagement, and the importance of this ranking factor will grow over time.

If you are wondering why your pages are not ranking high, don’t start by blaming page speed. It’s much more likely that you need to build higher quality content that delivers on user intent.

Google’s PageSpeed Insights tool tests how fast your pages are, and identifies places you can improve speed. Another great tool for testing website performance is WebPageTest.

Error pages affect user experience

Broken links create bad user experiences. Check your page after publishing, don't accidentally create bad links. The Internet is a constantly changing system and over several years pages may be retired or broken.

Google Search Console reports these as Index coverage errors, or you can crawl your own site to discover any broken links, internal or external. Don’t let your site fall into disrepair, check links and fix them if they stop working. Maintain your pages for the long term and setup redirection if changing your own URLs. That will help reduce errors as well as maintain external link value the site has built up.

Mobile device usability factors

Google understands that people are using different devices to search online. Websites should be built to work well on large monitors as well as small screens. In 2015 mobile phone searches outpaced desktop computer searches, settling in around a 60 /40 split (mobile/desktop). That same year, Google launched the “mobile-friendly update” which ranked websites with good mobile experiences higher in mobile search.

More recently, in 2018, Google moved to “mobile-first indexing” where they crawl and index websites using a smartphone agent to better understand the experience of a page on a mobile phone.

While overall search volume is higher for mobile devices vs desktop computers, for many B2B websites, the desktop/laptop computer still represents more of their traffic because people tend to use laptops and desktop computers with larger screens at their place of work. It’s worth checking which devices are accessing your website more often. If you are using Google Analytics, you can find this report in Audience > Mobile > Overview.

Google recommends a responsive website that stretches and fits different size monitors while maintaining the same page code for all. A responsive site will use CSS to restyle the page template at different viewports or screen widths.

The most important thing to understand in mobile-first indexing is maintaining parity between the mobile and desktop versions. Google might be missing out on important information if your mobile page provides a different experience or shows less content or links than the desktop version. Make sure to follow all of Google’s best practices for mobile-first indexing.

7. Fresh content

More often than not, people are searching for recently published content on a subject. Older content eventually goes stale, references become dated, ideas are updated and progress moves on. This is not to say that old content is a negative, but a website that is not publishing new content at a regular pace will be viewed as less authoritative compared to a website that is publishing new, quality content at a quick pace.

Breaking news!

Just like in the old days, the publisher who gets the scoop on the news is going to win the authority with their audience. Google has designed their algorithms specifically to notice and value fresh content.

Websites that can keep content production high while maintaining quality will naturally establish themselves as an authority in their field. Be first to publish new developments, capitalize on the trends happening right now because tomorrow it’s going to be something new.

Even if your business is not in publishing news, you can take advantage of trending topics by aligning your content to breaking news, seasonal/holiday trends, or big events. For the latter two: seasonal and events, plan your content ahead of time and be ready to publish at the right time.

Community FTW!

Some websites create fresh content by encouraging user-generated content (UGC). This can be user reviews, ratings, videos, or questions and answers. Social media sites and forum sites create a lot of UGC. Websites that value their community and encourage interaction get a natural SEO boost. Google values engagement because people value it.

Maintaining an active community is not easy to do, but here’s a few tips:

- Make sure to monitor and clean up spam.

- Solve disputes and keep interactions positive.

- Users are generally more interested to join a discussion online that’s already happening, so you need conversation starters.

- Find ways to “seed” engaging discussions.

- Identify the “power users” in your community who post often, want to help, and start conversations. Cultivate a relationship with active users and empower them to help maintain a friendly community.

Freshness algorithm

Google announced in 2011 they have algorithms that identify “query deserves freshness” (QDF). This means they can use mathematical models to identify queries where more recently published content should be favored over older content.

8. Click-through rate (CTR)

What is CTR?

Click-through rate is the percentage of users that click on your search result after it’s shown to them. Impressions is the number of times a result has been shown. The formula for CTR is:

Clicks ÷ Impressions = CTR

Disclaimer: Google representatives have stated many times that CTR is not a ranking signal. They have said it is not a direct ranking factor, but could it be an indirect ranking factor? Does it really matter?

It makes sense that Google can’t use CTR as a direct ranking factor, because it would be too easy to game that signal: Just hire millions of people to click on your search results! Spoiler alert, that doesn’t work.

Even if click-through rate is not considered in any way by Google, your website still depends on people clicking. Clicks are traffic. Google is using this data to inform their search results in some way, and if you can find ways to improve your CTR, you should be doing that to improve your site experience.

Improving CTR

If you want to improve your click-through rate, you need to improve how your page is being featured in the search results. There are a few ways you to do this:

- Meta title and meta description improvements

- Choose short, readable URLs

- Freshness, date of publication

- Structured data, to get a rich result

- Appearing in other search features, snippets, knowledge boxes, packs, or carousels

- Use relevant, engaging images

Position or rank in the search result is the most important factor in determining CTR.

Average CTR can be determined by looking at large datasets. CTR distribution in a SERP is generally the same query to query, but there are some differences between mobile and desktop and between different types of queries. With all the different search features, branded vs non-branded, it can be tricky to understand average CTRs. AdvancedWebRanking has a great resource you can use to find average CTRs by ranking position for a variety of situations.

Looking at overall organic CTR, it’s interesting to see how quickly CTR drops:

| Rank | Click-through Rate |

| 1 | 30% |

| 2 | 13% |

| 3 | 7% |

| 4 | 4% |

| 5 | 3% |

| 6 | 2% |

| 7 | 2% |

If you can beat the average CTR for your ranking, that’s your best case scenario. That is one small factor of many that can indicate your result deserves a higher ranking.

There are several studies and observations over the years that correlate CTRs with higher rankings. Everyone concludes that it’s worth the effort to improve CTR for traffic.

- Is CTR a ranking factor? (Dan Taylor)

- Queries & Clicks May Influence Google's Results (Rand Fishkin)

- Is Click Through Rate A Ranking Signal? (Aj Kohn)

9. Expertise, Authoritativeness, Trustworthiness (E-A-T)

E-A-T is a concept pioneered by Google in its Quality Rater’s Guidelines (QRG). This document is used to help Google’s teams of quality raters managed by third party companies. Quality raters are trained to judge and score the quality of webpages in relation to search rankings.

Quality ratings are not used to create Google’s algorithms but rather to test recent algorithm changes. In essence, they describe how to be a high-quality webpage. These are must-read guidelines that help us understand the ranking system that Google wants to create.

E-A-T overlaps with a lot of the other factors listed above, it’s an important concept to understand for SEO, even if it’s not a direct ranking factor. Google doesn’t have an E-A-T score, but working to improve in these areas is going to result in better quality content that scores higher in the thousands of “baby algorithms” working together.

Google explains how they use E-A-T signals in their whitepaper, How Google Fights Disinformation Online (See page 12, “How do Google’s algorithms assess expertise, authority, and trustworthiness?”).

Many of Google’s major algorithm updates attempt to value quality content better. Back in 2011, when their Panda update launched, content quality became a big focus. Google recommended thinking about several questions related to E-A-T. Google updated its advice with fresh questions about quality in a recent 2019 post about core algorithm updates. These must be important. Study these questions and take a critical look at your website, you might need to ask someone else to answer them for you.

Expertise

Google knows that people are searching for expert-level content. Expertise can be partly judged by how websites reference your content (links). Algorithms can value an author for expertise in certain topics, due to how much an author writes about a topic and the popularity of those articles. A prolific, expert author may find it’s easier to gain search rankings the more they write. Make sure to provide a search engine with robust information about authors, qualifications, experience, social media, and contact info. Depending on the information available, it is possible to connect an author to all the content they’ve written across different websites.

There are some topics that Google feels require a very high level of expertise to rank well. They call these topics “Your Money or Your Life” (YMYL) topics. Sites that provide legal, health, or financial advice are going to be held to a higher standard of expertise. More recent algorithm updates have been made to strengthen this signal, especially for websites publishing health information.

Authoritativeness

Authority is mostly about links. The more that other websites link to a site, the more popular that site will appear to Google. It’s similar to any popularity contest for people, authority tends to rise the more that a person is mentioned.

Trustworthiness

Measuring trust on the Internet can be a tricky thing, but there are a few ways that a search engine can leverage data for trustworthiness. Nearness to a trusted set of websites can be used to measure trustworthiness. The concept is that trustworthy sites generally link to other trustworthy sites, and untrustworthy sites don’t get links from trustworthy sites. A trusted set of websites can be selected by hand, or by algorithm -- what characteristics are common for sites we know to be trustworthy?

The other signal that a search engine can measure is sentiment. An untrustworthy site might have a lot of links pointing to it, but the context around those links might be very negative. The old adage still rings true, any publicity is good publicity, but Google has made attempts to devalue websites that promote negative or fraudulent experiences.

What is Quality Content?

When we talk about the kind of page that ranks well in search engines, the words “quality content” are often used, but exactly what do we mean by quality content? If you take all the 9 factors above, these cover most of what is considered quality content.

- Technical quality - accessible, no errors, no mistakes

- Unique - useful and informative content, not duplicative content

- Links - like votes of approval, indicators of quality

- Topical coverage - comprehensive understanding of sub-topics

- Be the resource that solves the problem the best

- Make the experience delightful - engaging, good design, no unwanted experiences

- New content, updated content, timely, fresh, high production over time

- Makes people want to click and keep clicking to more

- Expert level - created by an author with experience on the topic, citations, references

If you want to dive deeper into this topic, read this incredible resource on quality content by Patrick Stox.

10. Search engine guidelines

Google owns 92% of the international search engine market, so following their guidelines is of utmost importance. These kind of wrap everything up, don’t break the rules or you’re out!

We’ve already covered most of these general guidelines above, but it’s worthwhile to see how Google talks about these. This is a summary version of Google’s webmaster guidelines:

- Help Google find your pages

- Link to pages

- Use a sitemap, XML and HTML are both good

- Limit number of links per page to a reasonable number (~2000 max)

- Don’t allow infinite spaces to be crawled by Googlebot

- Help Google Understand your pages

- Create information-rich (text, formatting, meta data) pages

- Think about keywords people would use to find your pages. Include those in your title and alt attributes should be descriptive, accurate and specific

- Design a website with a clear hierarchy, or organization

- Follow best practices for image, video and structured data

- Make sure your CMS (WordPress, Wix, etc) creates pages and links that search engines can crawl

- Make sure site resources that affect the rendering of the page (JS, CSS) are crawlable

- Make sure search engine crawlers don’t have to process many sessions IDs or URL parameters

- Make important content visible by default. Content hidden behind tabs or expandable sections will be seen as less important.

- Make sure advertising links on your site do not affect search rankings by using robots.txt, rel= "nofollow", or rel= "sponsored"

- Help visitors use your pages

- Use text instead of images to display important names, content or links.

- Make sure all links go to live web pages.

- Optimize webpages for speed.

Make sure to continue reading through all the general guidelines, content-specific guidelines, and maybe most important of all, the quality guidelines, which tell you what not to do. You will be penalized by Google if you break these guidelines. For developers, check out Get started with Search: a developer’s guide.

Google has publicly released its Quality Rating Guidelines, which are very helpful in understanding what to do to create quality pages. Think of these guidelines as how Google wants its search engine to identify quality. Read these very carefully!

Stay up-to-date with new announcements from Google at their Search Central Blog and @googlewmc. Read between the lines. Challenge everyone’s theories. Understand how search engines work. Think like a search engine.

Follow Google representatives, they give the best clues about how to rank well in search.

John Meuller - @JohnMu

- AskGoogleWebmasters

- Google Search Central Live and SEO Office Hours

- Google Webmaster Central Hangout notes (prev name of Office Hours (DeepCrawl)

- John Meuller Reddit AMA (Mar 28, 2018).

- John Meuller Interview Takeaways by Tom Capper

Gary Illyes - @methode

- Gary Illyes Reddit AMA (Feb 07, 2019)

Martin Splitt - @g33konaut

Danny Sullivan - @searchliaison

Why is SEO important?

Improving online presence is vital for increasing brand awareness. Putting forth the effort to create a great website experience will benefit your business in the long run. The results of SEO are long-lasting and sustainable.

As you build on your efforts, and your authority grows, you will find it becomes easier to gain new rankings over time because your powerful domain and website distributes authority to all your pages.

“Never lose sight of the fact that all SEO ranking signals revolve around content of some kind.” — Duane Forrester

Your website is your castle. Your content is the moat around your castle. The wider (more comprehensive) and deeper (higher quality) this moat is, the harder it will be for a competitor to take you over.

As your search visibility grows, you will build credibility with users who will see your brand as a thought leader in the space. This ultimately builds trust, which is perhaps the biggest factor for encouraging people to become new customers.

Further Reading

- Google: How Search algorithms work

- Moz - Free SEO Learning Center [2020]

- Stanford CS - Google Page Rank Algorithm (Introduction level)

- PageRank and The Random Surfer Model (Advanced math on PageRank)