A search engine’s mission is to catalog the entire Internet, and it needs to do so quickly and efficiently. The scale of the Internet is massive. How many websites and how many pages are there? Way back in 2008, Google hit a milestone of 1 trillion pages crawled on the Internet. By 2013, Google was crawling around 30 trillion pages. Less than 4 years later, Google knew of 130 trillion pages. The rate of growth is staggering, and it is no small feat to discover all these pages.

If Google has trouble crawling or indexing your site, it will never make it into the search engine. Understanding how Google crawls and indexes all the websites on the Internet is critical to your SEO efforts.

What is crawling? What’s a web crawler?

Crawling refers to following the links on a page to new pages, and continuing to find and follow links on new pages to other new pages.

A web crawler is a software program that follows all the links on a page, leading to new pages, and continues that process until it has no more new links or pages to crawl.

Web crawlers are known by different names: robots, spiders, search engine bots, or just “bots” for short. They are called robots because they have an assigned job to do: travel from link to link and capture each page’s information. Unfortunately, if you envisioned an actual robot 🤖 with metal plates and arms, that’s not what these robots look like. Google’s web crawler is named Googlebot.

The process of crawling needs to start somewhere. Google uses an initial “seed list” of trusted websites that tend to link to many other sites. It also uses lists of sites seen in past crawls as well as sitemaps submitted by website owners.
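To make the idea concrete, here is a minimal sketch of a crawl that starts from a seed list and keeps following links until nothing new turns up. It is not how Googlebot is implemented. The `LINKS` dictionary is a made-up stand-in for the web, and the `crawl` function and the example URLs are assumptions chosen for illustration.

```python
from collections import deque

# Hypothetical link graph standing in for the web: page URL -> links found on that page.
LINKS = {
    "https://seed.example/": ["https://a.example/", "https://b.example/"],
    "https://a.example/": ["https://b.example/", "https://c.example/"],
    "https://b.example/": ["https://seed.example/"],
    "https://c.example/": [],
}


def crawl(seeds, fetch_links):
    """Breadth-first crawl: follow links from the seeds until no new pages remain."""
    frontier = deque(seeds)  # pages discovered but not yet crawled
    seen = set(seeds)        # every page discovered so far, to avoid repeat visits
    order = []               # pages in the order they were crawled
    while frontier:
        url = frontier.popleft()
        order.append(url)
        for link in fetch_links(url):
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return order


# A real crawler would download each page and extract its links;
# here the lookup in LINKS plays that role.
crawled = crawl(["https://seed.example/"], lambda url: LINKS.get(url, []))
```

The `seen` set is what keeps the crawler from looping forever when pages link back to each other, as the seed and `b.example` do here.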

Crawling the Internet is a continual process for a search engine. It never really stops. It’s important for search engines to find new pages published or updates to old pages. They don’t want to waste time and resources on pages that are not good candidates for a search result.

Google prioritizes crawling pages that are:

- Popular (linked to often)

- High quality

- Frequently updated

Websites that publish new, quality content get higher priority.
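One way to picture this prioritization is a queue that always hands the crawler the highest-scoring page next. The sketch below is only an illustration: Google does not publish its scoring, so the `crawl_score` formula, its weights, and the sample pages are all invented assumptions.

```python
import heapq


def crawl_score(page):
    """Toy score (made-up weights): links, quality, and update frequency all raise priority."""
    return page["inbound_links"] + 10 * page["quality"] + 5 * page["updates_per_month"]


def schedule(pages):
    """Return URLs in crawl order, highest score first."""
    # heapq is a min-heap, so the score is negated to pop the largest first.
    heap = [(-crawl_score(p), p["url"]) for p in pages]
    heapq.heapify(heap)
    order = []
    while heap:
        _, url = heapq.heappop(heap)
        order.append(url)
    return order


# Hypothetical pages with invented numbers.
pages = [
    {"url": "/old-press-release", "inbound_links": 2, "quality": 3, "updates_per_month": 0},
    {"url": "/blog/", "inbound_links": 40, "quality": 8, "updates_per_month": 12},
    {"url": "/about", "inbound_links": 15, "quality": 6, "updates_per_month": 1},
]
crawl_order = schedule(pages)
```

With these numbers the frequently updated, well-linked blog is crawled first and the stale press release last.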

What is crawl budget?

Crawl budget is the number of pages or requests that Google will crawl for a website over a period of time. The budget depends on the site’s size, popularity, quality, update frequency, and speed.

If your website is wasting crawling resources, your crawl budget will diminish and pages will be crawled less frequently, which can result in lower rankings. A website can unintentionally waste web crawler resources by serving up too many low-value URLs to a crawler. This includes “faceted navigation, on-site duplicate content, soft error pages, hacked pages, infinite spaces and proxies, low quality, and spam content.”

Google identifies websites to crawl more frequently but does not allow a website to pay for better crawling. A website can opt out of crawling or restrict crawling of parts of the site with directives in a robots.txt file. These rules tell search engine web crawlers which parts of the website they are allowed to crawl and which they are not. Be very careful with robots.txt. It’s easy to unintentionally block Google from all pages on a website. A Disallow rule matches every URL path that starts with the path indicated:

Disallow: /

[blocks crawling the entire site]

Disallow: /login/

[blocks crawling every URL in the directory /login/]

See Google’s support page for robots.txt if you need more help with creating specific rules.
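If you want to test rules before publishing them, Python’s standard library includes a robots.txt parser. The sketch below feeds it the two rules shown above and asks whether a few URLs may be crawled. The `build_parser` helper and the example paths are assumptions for illustration, and Python’s matching is a simple prefix check that may not agree with Googlebot in every edge case.

```python
from urllib.robotparser import RobotFileParser


def build_parser(robots_txt):
    """Parse robots.txt content supplied as a string, without any network request."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


# The two rules from the examples above, each applied to all user agents.
login_blocked = build_parser("User-agent: *\nDisallow: /login/\n")
site_blocked = build_parser("User-agent: *\nDisallow: /\n")

# Hypothetical URLs on yourdomain.com.
login_page_allowed = login_blocked.can_fetch("Googlebot", "https://yourdomain.com/login/reset")
blog_page_allowed = login_blocked.can_fetch("Googlebot", "https://yourdomain.com/blog/")
anything_allowed = site_blocked.can_fetch("Googlebot", "https://yourdomain.com/blog/")
```

Here `login_page_allowed` is `False` and `blog_page_allowed` is `True`, while the single `Disallow: /` rule makes `anything_allowed` `False` for every URL on the site.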

The robots.txt disallow command only blocks crawling of a page. The URL can still be indexed if Google discovers a link to the disallowed page. Google can include the URL and anchor text of links to the page in their search results, but wouldn’t have the page’s content.

If you don’t want a page included in Google’s search engine results, you must add a noindex tag to the page (and allow Google to see that tag). This concept shows us the distinction between crawling and indexing.
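A quick way to confirm that a page really carries the tag is to parse its HTML and look for a robots meta tag. This is a minimal sketch using Python’s built-in HTML parser. The class and function names are made up for this example, and it only inspects meta tags in the markup you pass in.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() in ("robots", "googlebot"):
            content = attr.get("content") or ""
            # Directives are comma-separated, e.g. "noindex, follow".
            self.directives.update(
                d.strip().lower() for d in content.split(",") if d.strip()
            )


def has_noindex(html):
    """Return True if the HTML contains a robots meta tag with a noindex directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return "noindex" in parser.directives


hidden_page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
normal_page = '<html><head><meta name="robots" content="index, follow"></head></html>'
```

Remember that Google has to crawl the page to see this tag, so a page blocked in robots.txt cannot pass along its noindex instruction.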

What is indexing?

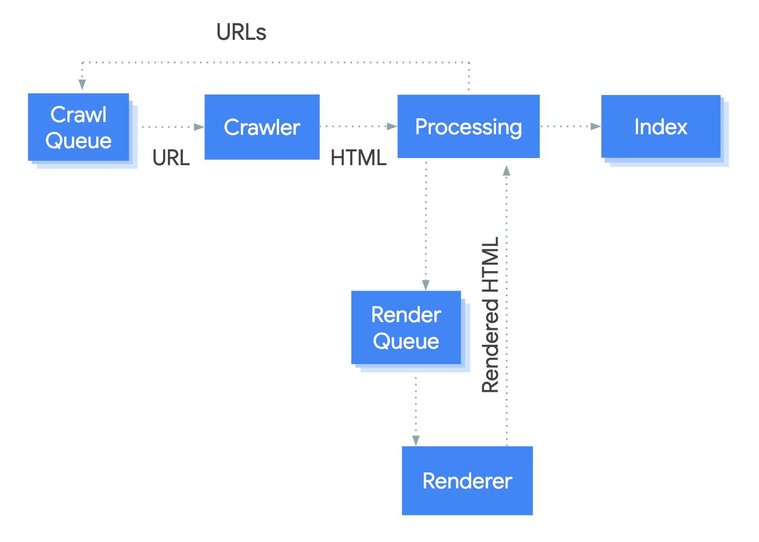

Indexing is storing and organizing the information found on the pages. The bot renders the code on the page in the same way a browser does. It catalogs all the content, links, and metadata on the page.

Indexing requires a massive amount of computing resources, and it’s not just data storage. It takes enormous processing power to render millions of web pages. You may have noticed this if you open too many browser tabs!

What is rendering?

Rendering is interpreting the HTML, CSS, and JavaScript on the page to build the visual representation of exactly what you see in your web browser. A web browser renders code into a web page.

The rendering of HTML code takes computer processing power. If your pages rely on JavaScript to render the page’s content, it takes an enormous amount of processing. While Google can crawl and render JavaScript pages, the JS rendering will go to a prioritization queue. Depending on the importance of the page, it could take a while to get to it. If you have a really large website that requires JavaScript to render the content on the pages, it can take a long time to get new or updated pages indexed. It’s recommended to serve content and links in HTML rather than JavaScript if possible.

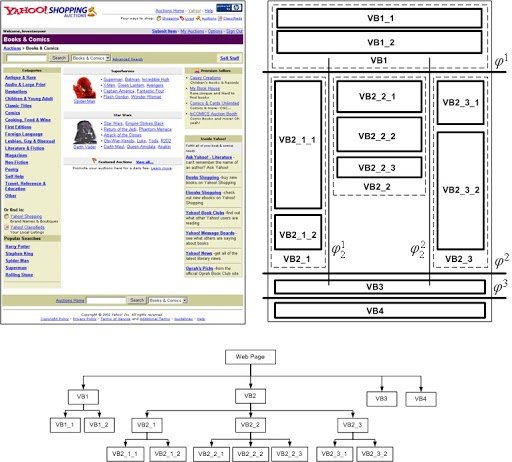

Block-level analysis (page segmentation)

Page segmentation or block-level analysis allows a search engine to understand the different parts of the page: navigation, ads, content, footer, etc. From there, the algorithm can identify which part of the page contains the most important information or primary content. This helps the search engine understand what the page is about and not get confused by anything else around it.

Google also uses this understanding to demote low-quality experiences, like too many ads on a page or not enough content above-the-fold.

A technical research paper published by Microsoft outlines how different sections on a webpage can be understood by an algorithm.

Page segmentation is also useful for link analysis.

“Traditionally different links in a page are treated identically. The basic assumption of link analysis is that if there is a link between two pages, there is some relationship between the two whole pages. But in most cases, a link from page A to page B just indicates that there might be some relationship between some certain part of page A and some certain part of page B.”

</figure>

<p style="text-align: right">[<a href="https://www.microsoft.com/en-us/research/publication/vips-a-vision-based-page-segmentation-algorithm/">source</a>]</p>

This type of analysis allows a contextual link within a large content block to have more weight (or value) than links appearing in navigation, footer, or a sidebar. The relevance of a link can be judged by what’s around it on the page and where it’s found on the page.
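As a rough illustration of weighting links by where they sit on the page, the sketch below assigns each link a weight based on the HTML block that encloses it. This is not the vision-based method from the Microsoft paper and not Google’s algorithm. It swaps in a much simpler technique that relies on semantic tags such as `nav` and `footer`, and the weights are invented.

```python
from html.parser import HTMLParser

# Hypothetical weights: links in the main content count more than boilerplate links.
BLOCK_WEIGHTS = {"main": 1.0, "article": 1.0, "aside": 0.3, "nav": 0.2, "footer": 0.1}
DEFAULT_WEIGHT = 0.5  # assumed weight for links outside any recognized block


class LinkWeigher(HTMLParser):
    """Records each link together with a weight taken from its enclosing block."""

    def __init__(self):
        super().__init__()
        self.blocks = []  # stack of enclosing block tags
        self.links = []   # (href, weight) pairs in document order

    def handle_starttag(self, tag, attrs):
        if tag in BLOCK_WEIGHTS:
            self.blocks.append(tag)
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                weight = BLOCK_WEIGHTS[self.blocks[-1]] if self.blocks else DEFAULT_WEIGHT
                self.links.append((href, weight))

    def handle_endtag(self, tag):
        if self.blocks and self.blocks[-1] == tag:
            self.blocks.pop()


def weigh_links(html):
    parser = LinkWeigher()
    parser.feed(html)
    parser.close()
    return parser.links


page = """
<nav><a href="/home">Home</a></nav>
<main><p>Read our <a href="/guide">crawling guide</a>.</p></main>
<footer><a href="/privacy">Privacy</a></footer>
"""
weighted = weigh_links(page)
```

In this toy page the contextual link to `/guide` inside the main content receives the full weight, while the navigation and footer links receive much less.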

Google also has patents on page segmentation looking at visual gaps or white space in the rendered page. These examples show what a search engine can accomplish with sophisticated algorithms.

What is the difference between crawling and indexing?

Crawling is the discovery of pages and links that lead to more pages. Indexing is storing, analyzing, and organizing the content and connections between pages. There are parts of indexing that help inform how a search engine crawls.

What can you do with organized data?

Google says its search index “contains hundreds of billions of webpages and is well over 100,000,000 gigabytes in size.” The indexing process identifies every word on the page and adds the webpage to the entry for every word or phrase it contains. It’s like a giant version of the index at the back of a book. The search engine takes note of the signals it finds (contextual clues, links, behavioral data) and determines how relevant the entire page is for each word it contains.
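This kind of structure is commonly called an inverted index: a map from each word to the pages that contain it. Here is a minimal sketch of the idea. The page text is made up, and a real search index also stores positions, signals, and relevance scores that are left out here.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and split it into simple alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages):
    """Map every word to the set of page URLs that contain it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in tokenize(text):
            index[word].add(url)
    return index


def search(index, query):
    """Return the pages that contain every word in the query."""
    words = tokenize(query)
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results


# Hypothetical pages and their text.
pages = {
    "/crawling": "How a web crawler follows links",
    "/indexing": "How a search index stores words and links",
}
index = build_index(pages)
```

Looking up a word is then a single dictionary access rather than a scan of every page, which is why `search(index, "crawler links")` can return only `/crawling` without rereading any page text.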

Knowledge Graph

Google used its massive database of information to build its Knowledge Graph. It uses the data found to map out entities or things. Facts are connected to things, and connections are made between things. A movie contains characters that are connected to a book written by an author who has a family with other connections, and so on. In 2012, Google said the Knowledge Graph contained more than 500 million objects and over 3.5 billion facts about the relationships between the objects. The facts collected and displayed for each entity are driven by the types of searches Google sees for different things.

The Knowledge Graph can also clear up confusion between things with the same name. A search for “Taj Mahal” could be a request for information about the architectural world wonder, or it could be for the now-defunct Taj Mahal casino, or possibly the local Indian restaurant down the street.
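A toy version of this idea stores facts as (thing, relation, thing) connections. The sketch below is only an illustration of the concept, not Google’s data model. The class name, the relation names, and the placeholder entities are all assumptions.

```python
from collections import defaultdict


class TinyKnowledgeGraph:
    """Stores facts as subject -> set of (relation, object) pairs."""

    def __init__(self):
        self.facts = defaultdict(set)

    def add(self, subject, relation, obj):
        self.facts[subject].add((relation, obj))

    def related(self, subject, relation):
        """Return everything connected to the subject by the given relation."""
        return sorted(o for r, o in self.facts.get(subject, set()) if r == relation)

    def entities_named(self, name):
        """Return every distinct entity that shares the same display name."""
        return sorted(s for s, facts in self.facts.items() if ("name", name) in facts)


kg = TinyKnowledgeGraph()
# Placeholder entities: a movie based on a book written by an author.
kg.add("movie:example_film", "based_on", "book:example_novel")
kg.add("book:example_novel", "written_by", "person:example_author")
# Two different things that share one name.
kg.add("place:taj_mahal_monument", "name", "Taj Mahal")
kg.add("business:taj_mahal_casino", "name", "Taj Mahal")

book = kg.related("movie:example_film", "based_on")[0]
author = kg.related(book, "written_by")
same_name = kg.entities_named("Taj Mahal")
```

Because each entity has its own identifier, the monument and the casino stay separate even though both are called “Taj Mahal”, and following connections leads from the movie to the book to its author.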

Conversational Search

Long ago, when Google first started, they returned search results that always contained the words someone had searched. The search results were simply matching keywords searched to the same keywords found in documents on the Internet. The meaning behind a search query was not understood, so it was difficult for Google to answer queries in the form of questions. In 1999, we were used to phrasing our search queries as keywords to get satisfactory results, but that has changed over the years.

Google invested in natural language processing algorithms to understand how words modified each other and what a query actually meant beyond merely matching words.

In 2012, Google launched “conversational search” capabilities made possible by the Knowledge Graph. In 2013, Google launched the Hummingbird algorithm, which was a major improvement that allowed Google to process the semantics or meaning of each word in every search query.

Importance of crawling and indexing for your website

- Don’t unintentionally block your website from Google

- Check and fix errors on your website

- Check Google’s index to make sure your pages appear the way you want them to

This is where your search engine optimization starts. If Google can’t crawl your website, you won’t be included in any search results. Make sure to check robots.txt. A technical SEO review of your website should reveal any other issues with search engine web crawler accessibility.

If your website is overloaded with errors or low-quality pages, Google could get the impression that the site is mostly useless junk pages. Coding errors, CMS settings, or hacked pages can send Googlebot down a path of poor-quality pages. When poor quality outweighs high-quality pages on a website, search rankings suffer.

How to check for crawling and indexing issues

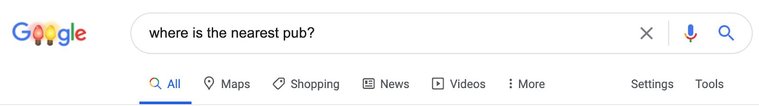

Google Search

You can see how Google is indexing your website with “site:”, a special search operator. Enter this into Google’s search box to see the pages Google has indexed on your website:

site:yourdomain.com

You can check for all the pages that share the same directory (or path) on your site if you include that in the search query:

site:yourdomain.com/blog/

You can combine “site:” with “inurl:” and also use the negative sign to remove matches to get more granular results.

site:yourdomain.com -site:support.yourdomain.com inurl:2019

Check that the titles and descriptions are indexed in a way that provides the best experience. Make sure there are no unexpected, weird pages or something indexed that should not be.

Google Search Console

If you have a website, you need to verify your website with Google Search Console. The data provided here is invaluable.

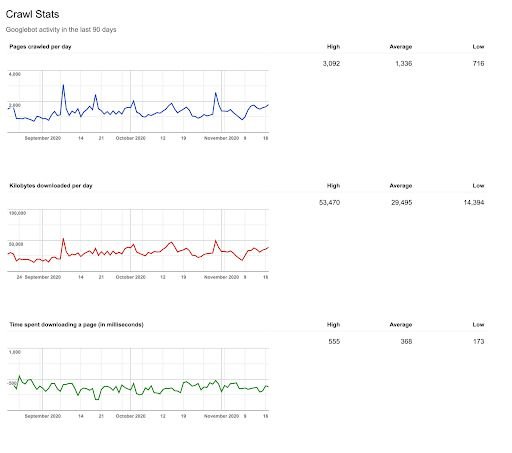

Google provides reports on search ranking performance: impressions and clicks by page, country, or device type, up to 16 months of data. Under the Index Coverage reports, you can find any errors that Google has found. There are many other helpful reports related to structured data, site speed, and how Google indexes your website. The Crawl Stats report can be found in the Legacy Reports (for now). This will give you a good idea of how Google is crawling your website over time, fast or slow, many pages or few.

Use a web crawler

Try using a web crawler to get a better idea of how a search engine crawls your website. There are several options available for free. Screaming Frog is one of the most popular, has a great interface, tons of features, and allows crawling up to 500 pages for free. Sitebulb is another great option for a full-featured web crawler with a more visual approach to the data presented. Xenu’s Link Sleuth is an older web crawler, but it’s completely free. Xenu doesn’t have as many features to help identify SEO issues, but it can quickly crawl large websites and check status codes and which pages link to which other pages.

Server Log Analysis

When it comes to understanding how Googlebot is crawling your website, nothing is better than server logs. A web server can be configured to save log files that contain every request or hit by any user agent. This includes people requesting web pages via their browser and any web crawlers like Googlebot. You won’t get information on how search engine crawlers are experiencing your website from web analytics applications like Google Analytics because web crawlers generally don’t fire JavaScript analytics tags, or they are filtered out.

Analyzing which pages Google is crawling is very helpful to understand if they are crawling your most important pages. It’s helpful to group pages by type, to see how much crawl is devoted per page type. You might group blog pages, about pages, topic pages, author pages, and search pages. If you see big changes in which page types are crawled, or a heavy concentration on a single page type (to the detriment of others), it can indicate a crawling issue that should be investigated. Spikes in error status codes also indicate obvious crawling issues.
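The grouping described above can be sketched in a few lines. This example assumes your server writes logs in the common “combined” format and that matching “Googlebot” in the user agent is good enough for a first look (user agents can be spoofed). The page-type rules and the sample log lines are made up for illustration.

```python
import re
from collections import Counter

# Combined Log Format: IP, identity, user, [time], "request", status, bytes, "referer", "agent"
LOG_PATTERN = re.compile(
    r'\S+ \S+ \S+ \[[^\]]+\] "(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

# Hypothetical rules mapping URL path prefixes to page types.
PAGE_TYPES = [("/blog/", "blog"), ("/author/", "author"), ("/search", "search")]


def page_type(path):
    for prefix, label in PAGE_TYPES:
        if path.startswith(prefix):
            return label
    return "other"


def summarize_googlebot(lines):
    """Count Googlebot requests by page type and by status code."""
    by_type, by_status = Counter(), Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if not match or "Googlebot" not in match["agent"]:
            continue  # skip unparseable lines and other visitors
        by_type[page_type(match["path"])] += 1
        by_status[match["status"]] += 1
    return by_type, by_status


# Made-up sample lines: two Googlebot hits and one regular browser visit.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample_log = [
    f'192.0.2.1 - - [10/Oct/2019:13:55:36 +0000] "GET /blog/post-1 HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'192.0.2.1 - - [10/Oct/2019:13:55:40 +0000] "GET /search?q=seo HTTP/1.1" 404 312 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [10/Oct/2019:13:56:01 +0000] "GET /blog/post-1 HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]
by_type, by_status = summarize_googlebot(sample_log)
```

Running the same summary over each day’s log and comparing the counts is one simple way to spot shifts in which page types are crawled or sudden spikes in error status codes.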

“Any sufficiently advanced technology is indistinguishable from magic.” - Arthur C. Clarke

The ability to crawl the entire Internet and find updates quickly is an incredible feat of engineering. The way Google understands the content of webpages, the connections (links) between pages, and what words actually mean can appear to be magical, but everything is based on the math of computational linguistics and natural language processing. While we may not have a complete understanding of this advanced math and science, we can recognize the capabilities. By crawling and indexing the Internet, Google can derive meaning and quality from measurements and context.